The do’s and don’ts in behavioral testing: improve your open field test

Published on

Thu 05 Jun. 2014

Topics

| EthoVision XT | Mice | Open Field | PhenoTyper | Rats | Video Tracking |

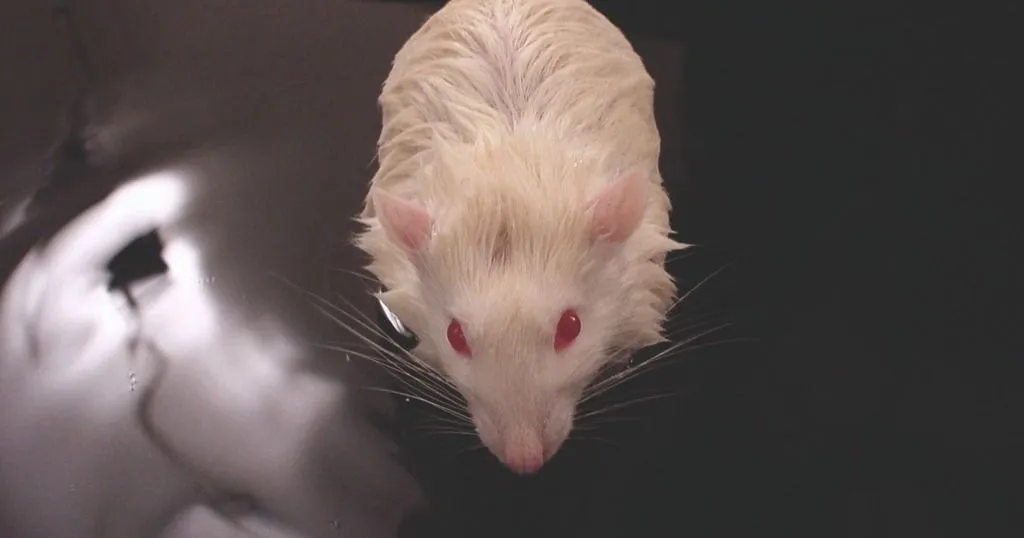

Scientists have been performing open field tests for quite some time now. For eighty years, to be exact. And over the years it has become one of the most popular tests in rodent behavioral research, as it allows the researcher to study (locomotor) activity, exploration, and anxiety all in one test environment. So what’s not to love? It’s easy, short, and straightforward. Plus, it is a highly validated test... or is it?

In their recent review in the Journal of Neuroscience Methods, Berry Spruijt (Professor of Ethology and Animal Welfare, Utrecht University, the Netherlands) and his colleagues tell us that, like most of the popular classical tests, it is actually not well validated. This is a well-known problem in behavioral research. For the open field test specifically, there seems to be a lack of reliability and validity. Despite all the effort labs put into standardizing their methods and procedures, there is still a great deal of variability in behavioral results within and across laboratories.

For many years now, Berry Spruijt and his co-workers have been drawing our attention to the limitations of the classical behavioral tests and the necessity of using more advanced behavioral methods and technologies. This ambition has even led to the establishment of an innovative contract research organization specialized in animal behavior, called Delta Phenomics. Although most of the classical tests are not bad in principle, there are a few important issues to consider if you want to use them well.

An open field is not just an open field

Let’s start with the obvious: the open field apparatus itself. What is the first image that pops into your head? Is it a round open field? Or square? How big is it? Does it have opaque walls, and how high are they? How are the measures taken: via video tracking, infrared beams, or with a hand-held stopwatch? Is the test conducted during the dark (active) or light (inactive) phase? Ask these questions in five different laboratories, and you will probably come across at least five different open field set-ups. Clearly there is little to no consensus there. Even the definition of an inner and outer zone in the arena (used to study behaviors such as thigmotaxis) is not clear-cut. So, how can we compare the results of different studies when there is already such variability in the apparatus and test set-up itself?
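To make the zone problem concrete, here is a minimal Python sketch. It is not taken from the review: the function name, the square arena, the centered square inner zone, and the sample track are all assumptions chosen for illustration. It shows how the share of time scored as "center" shifts when only the zone definition changes.

```python
def fraction_in_center(track, arena_size, center_fraction):
    """Share of position samples that fall inside a centered square zone.

    track: list of (x, y) positions sampled at a fixed rate, in the same
           units as arena_size, with (0, 0) at one corner of a square arena.
    center_fraction: side of the inner zone as a fraction of the arena side.
    (All names and conventions here are hypothetical.)
    """
    margin = arena_size * (1.0 - center_fraction) / 2.0
    low, high = margin, arena_size - margin
    inside = sum(1 for x, y in track if low <= x <= high and low <= y <= high)
    return inside / len(track)


# Made-up track in a 100 cm square arena, for illustration only.
track = [(5, 5), (20, 20), (30, 50), (50, 50), (70, 65), (95, 40)]

# Same animal, same track, two different inner-zone definitions.
for center_fraction in (0.5, 0.7):
    share = fraction_in_center(track, 100.0, center_fraction)
    print(f"inner zone = {center_fraction:.0%} of arena side: {share:.2f} of samples in center")
```

With this toy track, widening the inner zone from 50% to 70% of the arena side moves the point at (20, 20) from "outer" to "center", so the same behavior yields a different center-time score.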

Reading too much into the results

The very fact that the open field seems to be such an easy, short, and straightforward test might actually be its downfall. Over the years people have started using open field testing for the wrong reasons and/or have become too liberal when drawing conclusions from the data. As Spruijt and his colleagues mention, people generally use behavioral tests for two different purposes. On the one hand, one applies a test to answer a limited but clear question: for example, dose-response testing in pharmacological studies. The behavioral readouts are used as simple quantitative measures. On the other hand, one can conduct a test with the aim of assigning a biological function to the behavioral changes observed as a consequence of the experimental manipulation (e.g. an altered genome).

According to Spruijt et al., the classical tests (like open field and elevated plus maze) are sufficient for the first research purpose. However, understanding the biological function of behavior requires more. This is where things have gone wrong: people have started using tests that are appropriate and valid for answering straightforward questions for more hypothesis-free testing purposes. For instance, the time the animal spends in the center of the open field arena is generally used as a measure of anxiety, because anxiolytic drugs typically increase this measure. However, when any given manipulation happens to increase the time spent in the center, this does not automatically mean that it is anxiolytic. As Spruijt and his colleagues point out, we should be careful with these kinds of quick and oversimplified interpretations.

Simplistic or no automation

What clearly does not help is the continued use of simplistic automated tools (if automation is used at all), such as activity measurements with infrared photobeams. Although these tools are preferable to manual scoring of behavior, which is still frequently used, they are limited to the general activity of the animal, such as distance moved and time spent in particular areas (zones) of the open field. Moreover, tests tend to be relatively short (5 to 60 minutes), meaning that they basically only measure reactions to novelty.

The choice of these tools is particularly striking because innovation is not the limiting factor here. In fact, available technology now supports a wide range of movement and activity measurements, such as path length, the kind and number of zones visited, the number of turns the animal makes, etc. In addition to movement-related parameters, behavioral events such as rearing and grooming can be quantified automatically. The tools for analyzing this growing amount of data have kept pace as well. So why is it that the methods used in the majority of behavioral research studies seem to have frozen in time?
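As a rough illustration of how such movement parameters can be derived once positions are tracked, the sketch below computes a path length and a turn count from a list of (x, y) samples. This is a simplified example, not how any particular tracking package does it: the function names, the 45-degree turn threshold, and the sample track are assumptions.

```python
import math


def path_length(track):
    """Total distance moved: sum of straight-line steps between samples."""
    return sum(
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(track, track[1:])
    )


def count_turns(track, min_angle_deg=45.0):
    """Count heading changes larger than min_angle_deg between moving steps.

    The threshold is an arbitrary, hypothetical choice; samples where the
    animal did not move are skipped because they have no heading.
    """
    headings = [
        math.atan2(y2 - y1, x2 - x1)
        for (x1, y1), (x2, y2) in zip(track, track[1:])
        if (x1, y1) != (x2, y2)
    ]
    turns = 0
    for h1, h2 in zip(headings, headings[1:]):
        diff = abs(h2 - h1)
        diff = min(diff, 2 * math.pi - diff)  # smallest angle between headings
        if math.degrees(diff) > min_angle_deg:
            turns += 1
    return turns


# Made-up positions in cm, for illustration only.
track = [(0, 0), (3, 4), (6, 8), (6, 18), (16, 18)]
print("path length (cm):", path_length(track))
print("turns over 45 degrees:", count_turns(track))
```

Note that both numbers depend on choices such as sampling rate, smoothing, and the turn threshold, which is one more reason to report those settings alongside the results.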

Behavior is what you see?

The answer to this question is twofold. First, when it comes to observing behavior, we humans think we know better than computers. While genes, molecules, and action potentials are things we cannot see, so we obviously need advanced technology to measure them, the added value of innovative technology in measuring behavior has been underestimated for years, explain Spruijt et al. We can simply see an animal’s behavior, so why do we need computers for the job?

The danger here is that we think we know what we see, and what it means. However, we do not see everything. The automatic, and thus objective, way in which technology gathers behavioral data actually allows us to see more. Additionally, and maybe even more importantly, animal behavior is much more complex than we are aware of. Behavior is like a language. It does not merely consist of behavioral elements (the ‘words’); the order of the elements (the ‘syntax’) has meaning too. This order, however, usually goes unnoticed by the human observer, especially across longer observation times. To understand behavior and grasp its true meaning, we will need to rely on advanced technology that can analyze the frequency and duration of the behavioral elements, as well as the sequential organization of behavior.
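The difference between ‘words’ and ‘syntax’ can be sketched in a few lines of Python. The example below is only an illustration under assumed names and made-up data: it takes a scored sequence of behavioral events and summarizes frequency and total duration per behavior, then counts which behavior follows which.

```python
from collections import Counter


def summarize_sequence(events):
    """Summarize a scored sequence of (behavior, duration_in_seconds) events.

    Returns three Counters: how often each behavior occurred, its total
    duration, and how often each behavior was directly followed by another.
    (Function name and event format are hypothetical.)
    """
    frequency = Counter(name for name, _ in events)
    duration = Counter()
    for name, seconds in events:
        duration[name] += seconds
    # The 'syntax': ordered pairs of consecutive behaviors.
    transitions = Counter(
        (first, second) for (first, _), (second, _) in zip(events, events[1:])
    )
    return frequency, duration, transitions


# Made-up event log, for illustration only.
events = [
    ("walk", 4.0), ("rear", 1.5), ("walk", 3.0),
    ("groom", 6.0), ("walk", 2.0), ("rear", 1.0),
]
frequency, duration, transitions = summarize_sequence(events)
print("frequency:", dict(frequency))
print("duration (s):", dict(duration))
print("transitions:", dict(transitions))
```

Two animals could show identical frequencies and durations and still differ in the transition counts, which is exactly the kind of information a human observer tends to miss.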

Second, researchers also seem reluctant to switch to new technology. A huge body of literature represents ‘the old ways’, and researchers hold on to these, even when they might be wrong. Most of this referenced data, generated over decades of research, serves as the ‘backbone’ for new developments. According to Spruijt et al., this is a difficult circle to break.

How to move on to better things

In their article, Spruijt and his colleagues propose a list of solutions and implementations to improve the validation of the classical tests in behavioral research, in particular open field testing. You can read about this in our next post, to be posted here on this blog next week.

Curious? Check out the publication yourself, right now!

Spruijt, B.M.; Peters, S.M.; Heer, R.C. de; Pothuizen, H.H.J.; Harst, J.E. van der. (2014). Reproducibility and relevance of future behavioral sciences should benefit from a cross fertilization of past recommendations and today’s technology: “Back to the future”. Journal of Neuroscience Methods, article in press, doi:10.1016/j.jneumeth.2014.03.001.
