Predicting Advertising Effectiveness: Facial Coding of 120,000 Video Frames

Advertising and marketing companies have just gained a new addition to their repertoire of neuromarketing tools: automated coding of facial expressions of basic emotions.

Published on

Thu 06 Jun. 2013

Three researchers from the Amsterdam School of Communication Research (ASCoR) at the University of Amsterdam (UvA) have demonstrated that specific patterns of facial expressions partially explain the effectiveness of amusing persuasive video advertisements [1].

The study was conducted on a crowdsourcing platform and yielded more than 120,000 frames of recorded facial reactions, which were then analyzed within a few hours by a facial coding system called FaceReader [2].

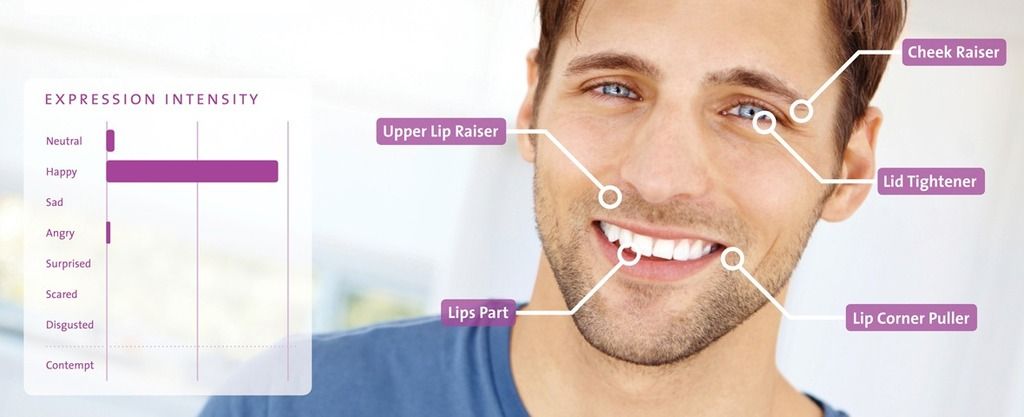

Automatic analysis of facial expressions

The software automatically analyzes facial expressions of happiness, surprise, sadness, fear, anger, and disgust in a fraction of the time it would take a team of independent human facial coders. In the past, manually coding this much material could have taken two to three months of tedious work.

The study was published on 6 June 2013 in Bonn, Germany, by the Association for NeuroPsychoEconomics, in the proceedings of the 9th Annual NeuroPsychoEconomics Conference, whose theme was “Bridging Boundaries: Applying Neuroeconomics to Medicine, Social Science and Business.”

In the study, ninety U.S.-based participants watched three advertisements that differed in how amusing they were, while their facial reactions were video-recorded. After each video, they completed a self-report on how much they enjoyed the ad and whether they liked the presented brand.

Facial reactions vs. self-report

Using FaceReader, the researchers analyzed the underlying patterns in the facial reactions and related them to the self-reported scores of advertisement effectiveness.

Excitingly, they found that, for the most amusing video ads, scores on facial expressions of happiness explained as much as 37% of the variance in self-reported attitude toward the ad, the core measure of an advertisement’s effectiveness.
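To make "explained 37% of the variance" concrete: in a simple linear regression, the share of explained variance is the R² statistic. The sketch below is only an illustration. The numbers are made up rather than taken from the study, and the function and variable names are hypothetical.

```python
from statistics import mean

def r_squared(x, y):
    """Share of the variance in y explained by a least-squares line fitted on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    slope = sxy / sxx
    intercept = my - slope * mx
    # Residual sum of squares: what the fitted line fails to explain.
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    return 1 - ss_res / syy

# Illustrative values only (NOT data from the study):
# one happiness score and one attitude-toward-the-ad rating per viewer.
happiness = [0.10, 0.25, 0.40, 0.55, 0.70, 0.85]
attitude = [3.0, 4.5, 3.5, 5.5, 4.0, 6.0]

print(f"R^2 = {r_squared(happiness, attitude):.2f}")
```

An R² of 0.37 would mean that a bit more than a third of the differences between viewers' attitude scores can be accounted for by their happiness scores, with the rest left to other factors.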

Happiness explained attitude toward the brand

Although the other basic expressions did not help predict attitude toward the advertisement or the brand for the amusing persuasive stimuli, happiness did partially explain attitude toward the brand. Furthermore, FaceReader could reliably distinguish between high-, medium-, and low-amusement persuasive video advertisements.

Importantly, the researchers also devised a novel way of linking FaceReader’s continuous, frame-by-frame interpretation of the subjects’ facial expressions to a single, static dependent variable: the advertisement’s effectiveness. However, they argue that more research is needed, especially to test whether other types of affective stimuli (e.g. gloomy, disgusting, or scary) can be related to constructs typically studied in advertising research, such as purchase intention or actual buying behavior. They also highlight the importance of cross-validating FaceReader against other commonly used measures such as AdSAM®, PrEmo, or facial EMG.
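This article does not describe how the authors linked the frame-level output to a single score, so the following is not their method. As a generic illustration of the problem, one simple approach is to collapse each viewer's per-frame scores into a few summary features that can then be compared with a one-off questionnaire rating. The function name, the feature choices, and the assumption that scores run from 0 to 1 are all hypothetical.

```python
from statistics import mean

def summarize_frames(frame_scores):
    """Collapse one viewer's per-frame happiness scores into static features.

    Assumes each score is an intensity between 0 and 1 (an assumption made
    for this sketch, not a detail stated in the article).
    """
    if not frame_scores:
        raise ValueError("no frames to summarize")
    return {
        "mean": mean(frame_scores),   # average intensity over the whole ad
        "peak": max(frame_scores),    # strongest single reaction
        # fraction of frames in which happiness was the stronger half of the scale
        "share_above_half": sum(s > 0.5 for s in frame_scores) / len(frame_scores),
    }

# Made-up frame series for one viewer, for illustration only.
viewer_frames = [0.0, 0.1, 0.4, 0.8, 0.9, 0.6, 0.2]
features = summarize_frames(viewer_frames)
print(features)
```

Each viewer then contributes one row of features, which puts the facial data on the same footing as the single self-reported score per viewer.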

Conclusion

This recent study contributes to the growing field of affective consumer neuroscience by analyzing facial reaction data to predict advertising effects.

A strength of the experiment was its use of a crowdsourcing platform, which made it possible to record participants in their natural environments, their homes and offices, the usual places where consumers watch persuasive video material. The trade-off is that material gathered through such platforms is often of poor quality, because recording conditions cannot be controlled as tightly as in a laboratory setting. Poor lighting and technical failures on the end users’ side made the collected material exceptionally hard to analyze.

However, FaceReader proved able to handle this impoverished data exceptionally well, and allowed the researchers to perform a fast, reliable, and automated analysis of facial expressions of basic emotions.

By Peter Lewinski

PhD Candidate – Amsterdam School of Communication Research, UvA

Marie Curie Research Fellow – Vicarious Perception Technologies, B.V.

Early Stage Researcher – CONsumer COmpetence Research Training, FP7

[1] P. Lewinski, E.S. Tan & M.L. Fransen (2013). Predicting advertising effectiveness by facial expressions in the amusing persuasive stimuli. In B. Weber, M. Reuter, A. Falk, M. Reimann & O. Schilke (Eds.), 2013 NeuroPsychoEconomics Conference Proceedings (p. 45). Bonn, Germany: Association for NeuroPsychoEconomics. ISSN: 1861-8243. Databases: EBSCO. Review: double-blind peer review.

[2] Noldus (2013). FaceReader: Tool for automatic analysis of facial expression: Version 5.0.15. Wageningen, The Netherlands: Noldus Information Technology B.V.
