Saying Ouch Without Saying It: Measuring Painful Faces

What happens when we’re in pain, real physical pain, but we cannot tell someone where or how badly it hurts? We can look at the facial expression!

Published on

Mon 02 Mar. 2020

Topics: Emotions, FaceReader, Facial Expression Analysis, Measure Emotions

Unlike the song by Three Days Grace [1], I am not a fan of pain. Call it Epicurean, call it hedonistic, whatever; I don’t want to experience pain, either psychological or physical. In the past two months I lost a grandfather to dementia and a sister to an unfathomable act of violence.

In the past three years I have been to more funerals than in my previous thirty-eight years combined. So yeah, I’m not a fan of pain. I don’t like it when I stub my toe or twist my ankle, and I really don’t like seeing a lifeless family member lying in a box.

But it happens and you deal with it, either by relying upon your support network for emotional/psychological pain, or with something pharmacological for physical pain. This can be a slippery slope, of course, as opioid abuse has reached epidemic status, particularly here in Southwestern Ohio [2, 3].

There are some interesting neural mechanisms for this, which I won’t go into here, but if you are interested, I encourage you to check out some of my peer-reviewed published work [4].

Non-verbal behavior instead of verbal behavior

What happens when we’re in pain, real physical pain, but we cannot tell someone where or how badly it hurts? For example, in the last few weeks of his life, my grandfather was non-verbal. First went English, then his native German, until the only remaining communication was grunts, and only on some days.

So how can his hospice care know if my Opa is in pain when he can’t express that he’s hurting? I assume you read the title, so spoiler alert: we can look at the facial expression.

Not limited to basic facial expressions

By now we all know FaceReader. It automatically analyzes facial expressions based upon the ‘basic’, universal expressions. However, a recent update to FaceReader now gives researchers the opportunity to design their own algorithms by combining variables such as facial expressions, action units, and heart rate to create “Custom Expressions”.

What this means is that we can create our own states and attitudes. We don’t have to limit ourselves to happy or sad, but can expand our research horizons, so to speak. Now, let’s get back to the topic at hand, introduced by Mr. T’s Clubber Lang: pain [5].

Oh wait, let’s back up one more second. Remember that in addition to the basic emotions, FaceReader can also classify twenty individual muscle actions, known as Action Units (AUs). The combination of AUs around the eyes and mouth creates the emotions we know and recognize. For example, smiling uses the cheek raiser, orbicularis oculi (pars orbitalis) - AU6, and the lip corner puller, zygomaticus major - AU12. Action Unit 4, the brow lowerer, is involved in a host of emotions and states: anger, fear, concentration, and frustration.

If you remember previous posts, the dimpler, AU14, is heavily involved in contempt as well as fake smiles. This means that it’s not the individual muscles, per se, but the delicate interaction of all the muscles in our faces.
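
To make the “combination, not single muscle” point concrete, here is a minimal Python sketch. It is not how FaceReader works internally: the pattern table and the function name `matching_patterns` are made up for illustration, and the only pattern taken from this post is that a smile uses AU6 and AU12 together.

```python
# Hypothetical lookup table: expression name -> AUs that must all be active.
# Only the smile entry (AU6 + AU12) comes from the post; this is an
# illustration, not FaceReader's actual model.
PATTERNS = {
    "smile": frozenset({6, 12}),
}


def matching_patterns(active_aus, patterns=PATTERNS):
    """Return the names of patterns whose required AUs are all active."""
    active = set(active_aus)
    return sorted(
        name for name, required in patterns.items() if required <= active
    )


if __name__ == "__main__":
    print(matching_patterns([4, 6, 12]))  # AU6 and AU12 are both present
    print(matching_patterns([12, 14]))    # AU12 without AU6 does not match
```

The subset check is the whole idea: AU12 on its own is not enough to call it a smile, which is the same reason a single muscle never tells the full story.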

The identification of pain in non-verbal patients

Interestingly, pain is a really complicated expression. It involves a multitude of muscles; researchers agree on some of them and disagree on others (see the review in Arif-Rahu & Grap, 2010 [6]). Recently, in collaboration with Dr. Thomas Hadjistavropoulos at the University of Regina [7], we built a custom expression for the identification of pain in nonverbal ICU patients.

The algorithm is based on a number of factors. Let’s begin with the eyes. We start with AU6 and AU7, the cheek raiser and the lid tightener. Throw in AU4, the brow lowerer. Then look at the nose by adding AU9, the ‘wrinkler’.

Think about an unpleasant odor or something that disgusts you. For me, it’s a walk-in cooler full of every species of cockroach on Earth. Yuck. Sorry, distracted. Once the nose goes, though, the upper lip is quick to follow. This is AU10, the upper lip raiser.

The final act of the painful expression is the actual closing of the eyes, measured by AU43. Taken together you get a symphony of pain. Oh, that sounds like a great doom metal album.
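
For readers who think in code, here is one way such a combination could be sketched in Python. This is an assumption-heavy illustration, not the custom expression we built: the component names, the 0 to 1 intensity scale, and the plain average are all hypothetical choices, used only to show the idea of folding several facial actions into one score.

```python
from statistics import mean

# Hypothetical names for the facial actions described above (brow, eyelids,
# nose, upper lip, eye closure). Names and equal weighting are assumptions.
PAIN_COMPONENTS = (
    "brow_lowerer",
    "lid_tightener",
    "nose_wrinkler",
    "upper_lip_raiser",
    "eyes_closed",
)


def pain_score(intensities, components=PAIN_COMPONENTS):
    """Average the component intensities, each clamped to the range 0..1.

    Components missing from `intensities` count as 0 (not active).
    """
    clamped = [
        min(1.0, max(0.0, float(intensities.get(name, 0.0))))
        for name in components
    ]
    return mean(clamped)


if __name__ == "__main__":
    frame = {
        "brow_lowerer": 0.8,
        "lid_tightener": 0.6,
        "nose_wrinkler": 0.4,
        "upper_lip_raiser": 0.2,
        "eyes_closed": 1.0,
    }
    print(round(pain_score(frame), 2))
```

A plain average keeps the sketch easy to read; a real custom expression could weight the components differently or add other signals such as heart rate.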

Reaction of the face to pain

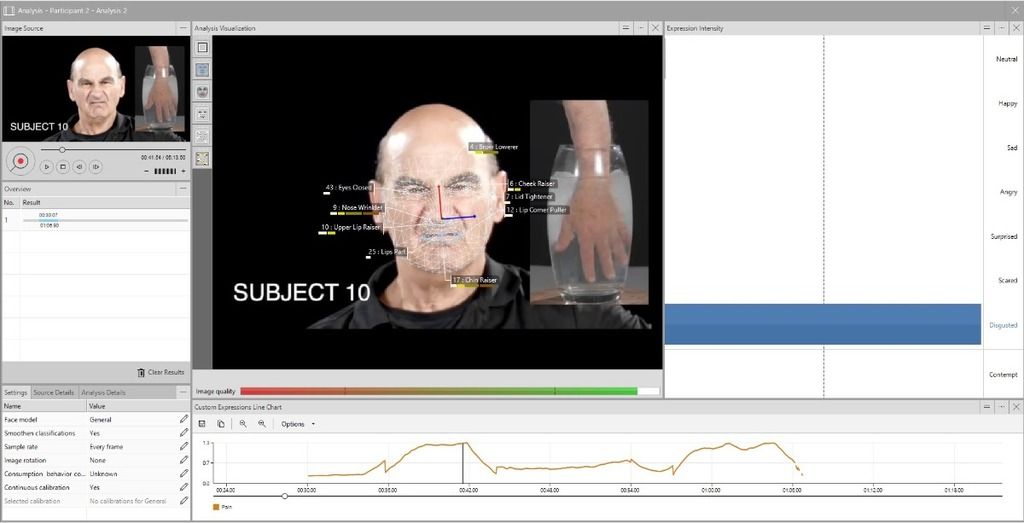

In order to test the new algorithm, I found a video on YouTube where people stuck their hands in ice cold water, a common test of pain [8]. As the image above shows, the algorithm works! Interestingly, if one were looking only at the ‘basic’ emotions, one might say these people are disgusted or even happy, as the muscles for pain overlap with those individual expressions.

But taken together, we see that the ice water is, in fact, causing physical distress and their faces are reacting accordingly. Of course, at first there is a bit of surprise, as the jolt of temperature lights up the face. But once the nociceptors start feeling that ice cold water, you can see the painful expression rise.
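
If you had a pain score for every video frame, that “brief jolt first, then a steady rise” pattern could be picked out with something like the Python sketch below. It is only an illustration: the function name `sustained_onset`, the threshold of 0.5, and the three-frame minimum are assumptions, not FaceReader settings.

```python
def sustained_onset(scores, threshold=0.5, min_frames=3):
    """Return the index of the first frame that starts a run of at least
    `min_frames` consecutive scores at or above `threshold`, else None."""
    run_start = None
    for i, score in enumerate(scores):
        if score >= threshold:
            if run_start is None:
                run_start = i  # a new run of high scores begins here
            if i - run_start + 1 >= min_frames:
                return run_start
        else:
            run_start = None  # the run was interrupted, start over
    return None


if __name__ == "__main__":
    # Hypothetical per-frame scores: one short blip, then a sustained rise.
    scores = [0.1, 0.6, 0.2, 0.3, 0.55, 0.7, 0.8]
    print(sustained_onset(scores))  # prints 4
```

Requiring a few consecutive frames keeps a one-frame blip from being counted as the start of the painful expression.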

Wanna try it for yourself? Feel free; take a video of yourself and send it to me*. I’ll analyze it for you, unless of course I open my big mouth and offer something that results in thousands of images being sent to me daily. If that happens, we might have to build a new website to let you try it yourself, kinda like we did for RBF [9]. If you’re feeling saucy and charitable, might I recommend the Polar Bear Plunge [10].

Which emotions can FaceReader analyze?

Are there other, more complex emotions that FaceReader can analyze? Of course, hence the rhetorical question. Right now, FaceReader comes with an analysis of confusion, boredom, and interest. It would interest me to see your confusion or boredom while reading this. Too much? Ok, I’m almost done.

At any rate, the emotional landscape has opened well beyond the initial six expressions, and researchers now have a powerful tool to automate complex expressions. Drop us a line and let’s talk about what pains you in your research and how we can take the pain out of your facial expression analysis.

*As a postscript, I don’t encourage you to record yourself getting seriously hurt. I was half-joking about the ice water, but that’s the full extent to which I want you to feel pain.

References

1. https://youtu.be/Ud4HuAzHEUc

2. https://www.uc.edu/about/opioidtaskforce.html

3. https://www.wyso.org/post/major-mapping-cincinnatis-opioid-crisis

4. Rogers, J.L., Ghee, S., See, R.E. (2007). The neural circuitry underlying reinstatement of heroin-seeking behavior in an animal model of relapse. Neuroscience, 151, 579-88.

5. https://youtu.be/lSPNQ82Sq4E

6. Arif-Rahu, M. & Grap, M. J. (2010). Facial expression and pain in the critically ill non-communicative patient: state of science review. Intensive & Critical Care Nursing, 26 (6), 343–352. https://doi.org/10.1016/j.iccn.2010.08.007

7. https://www.uregina.ca/arts/psychology/faculty-staff/faculty/hadjistavropoulos-thomas.html

8. https://youtu.be/WtyUqwAod58

9. www.testrbf.com

10. https://en.wikipedia.org/wiki/Polar_bear_plunge
