The role of facial expression of emotion in joint activities

How does emotion expression guide interactive value learning and interactive corrective action selection? Dr. Robert Lowe investigated this and shares his findings in this guest blog post.

Published on

Tue 06 Oct. 2020

Topics

| Emotions | FaceReader | Facial Expression Analysis | Human-computer Interaction |

By Robert Lowe, Associate Professor at the Division of Cognition & Communication at the University of Gothenburg, Sweden.

Robert Lowe’s group at the University of Gothenburg has been researching the role that processing facial expressions of emotion plays in cognitively demanding tasks. Their focus has been on whether and how emotion expression guides:

- interactive value learning, and

- interactive corrective action selection.

The researchers have carried out different social interaction-based studies on the differential outcomes learning paradigm and an interactive map navigation task requiring corrective action selection. The researchers’ broader interest concerns the use of emotion in joint activities.

Differential outcomes learning

In a recently published article by Rittmo et al. (2020), the researchers adapted an existing psychology-learning paradigm – differential outcomes learning for transfer of response knowledge. Differential outcomes training is utilized in classical stimulus-response training setups where an arbitrary stimulus (e.g. an image of an umbrella, in a computerized task) is followed by different response options (e.g. a left and right response tab to be pressed) where only one such response provides ‘correct’ feedback. Learning is by trial and error. Participants are required to match various stimuli with the different response options in order to receive a rewarding or reinforcing outcome (e.g. image of money).

Unlike the standard behavioural learning approach, differential outcomes training entails the different stimulus-response pairings being followed by differential rewarding outcomes (e.g. presentation of images for: large money reward versus low money reward). A differential outcomes effect – faster learning under differential versus non-differential (same reward for every correct response) outcomes training – has been reliably found across studies (McCormack et al. 2019) using this experimental setup.
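The difference between the two training schedules can be made concrete with a minimal sketch. The stimulus, response, and outcome labels below are hypothetical placeholders chosen for illustration, not the materials used in the studies.

```python
# Hypothetical mapping from each stimulus to its 'correct' response.
CORRECT_RESPONSE = {"S1": "R1", "S2": "R2"}

# Hypothetical differential outcomes: one outcome per stimulus-response pairing.
DIFFERENTIAL_OUTCOME = {"S1": "high reward", "S2": "low reward"}


def outcome(stimulus, response, differential):
    """Return the outcome presented after a response on a single trial."""
    if response != CORRECT_RESPONSE[stimulus]:
        return "no reward"
    if differential:
        # Differential training: the outcome depends on the pairing.
        return DIFFERENTIAL_OUTCOME[stimulus]
    # Non-differential training: same reward for every correct response.
    return "reward"


print(outcome("S1", "R1", differential=True))   # high reward
print(outcome("S2", "R2", differential=True))   # low reward
print(outcome("S2", "R2", differential=False))  # reward
```

Under differential training the outcome itself carries information about which pairing was just completed. The differential outcomes effect is attributed to that extra information.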

Interactive value learning from expressed emotions

The researchers at Gothenburg University performed a novel variant of the differential outcomes procedure in the form of a computerized task in a social setting. Participants had to learn from videos of an actress (confederate) expressing positive and negative emotions following high or low rewarded instrumental responses (an image of a large amount of money or a small amount of money, respectively).

Negative emotion, in this case, served as an expression of mild frustration for a relatively low rewarding outcome following the correct response to the previously presented stimulus.

Associations between visual stimuli and response options

In the experiment, participants were initially required to learn associations between visual stimuli and response options where different pairings led to different valued (rewarding) outcomes (images of high versus low cash rewards). The participants were then required to observe a confederate participating in the same task but with novel visual stimuli presented.

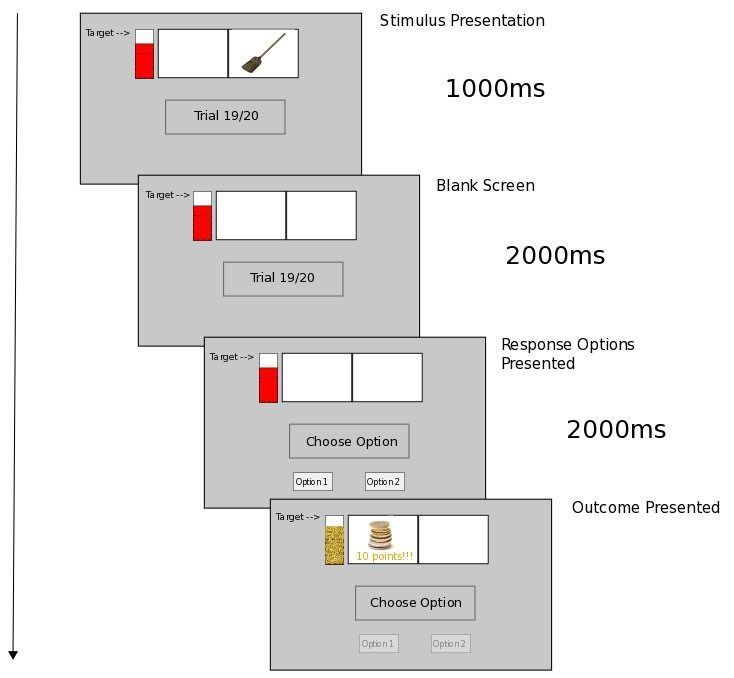

So whereas in the initial training the stimuli included images of a broom (on the computer screen), in the observation training an image of a bus might be presented. The trial progression of the initial training is shown in the accompanying diagram.

In the observation training, the trial progressed as for the initial training but now the response options were hidden from the observing participant’s view whilst the video of the actress played throughout the trial.

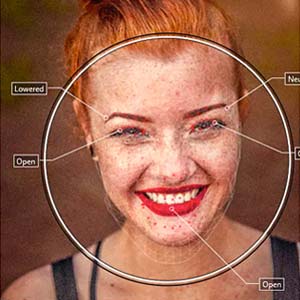

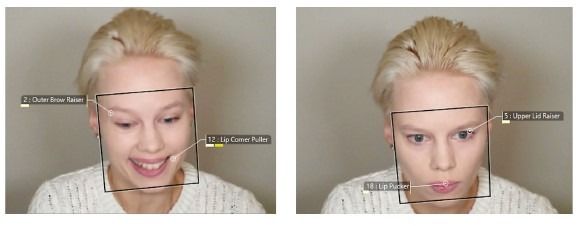

At the presentation of the outcome (also hidden from the observing participant’s view), the actress would smile following a high reward or express mild frustration following a low reward, as shown in the accompanying images.

The actress was instructed to express emotions naturally. FaceReader was used to evaluate the tractability of the affective valence of the expressions, which was shown to be reliable when assessed for the 20 successive trials used for observation training. In this way the expressed emotion served as a proxy for the high and low valued outcomes from which the participant could potentially learn.

Associative Two-Process Theory

The experiment of Rittmo et al. (2020) evaluated the participants’ interactive value learning across the initial training and observation training stages by means of a test stage. In this test stage, response options were again made visible (as for initial training) to the participants, who tended to select the responses ‘chosen’ by the actress in spite of never having seen the actress respond to the particular novel stimuli (in fact she didn’t select responses but merely reacted to a video-recorded setup).

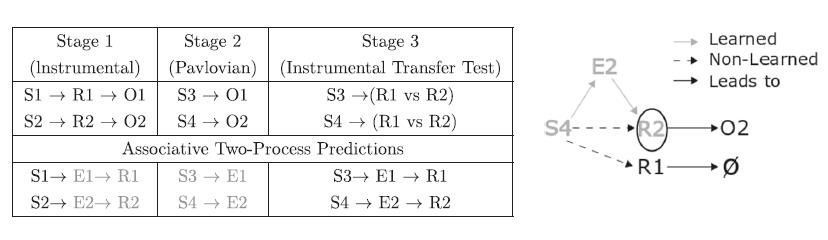

Based on Lowe et al. (2016), participant test stage response selection was predicted according to an adapted (social) version of Associative Two-Process theory (Trapold 1970, Urcuioli 2005). Classic Associative Two-Process theory is schematized in the accompanying diagram in relation to our experiment.

On the top row is shown the trial procedure. In Stage 1 can be seen the correct response for a given presented stimulus (e.g. R1 following S1, where R2 is ‘incorrect’ for this trial) and the rewarding outcome that follows (O1 for the S1->R1 pairing), with no reward or a punishing outcome presented when the incorrect response is chosen, e.g. S1->R2->punishment.

In each stage of the experiment, different Stimuli (S1, S2, S3, S4) and Response options (R1, R2) are presented over a number of trials (one stimulus per trial). In Stages 1 and 3 both response options are presented (consistent with the schema above) and the ‘correct’ response leads to a rewarding outcome (O1 or O2), otherwise no reward.

Forming expectations

Associative two-process theory predicts (bottom row of above diagram) that participants form expectations (values) for the different outcomes (E1, E2), in our case for high (O1) and low reward (O2), respectively. These expectations serve as internal stimuli that can be associated with both (external) Stimuli and Responses. In this way, participants may learn that, for example, E2 was associated with R2 (Stage 1), and with S4 (Stage 2).

Thereby, if S4 is presented again in Stage 3, it should associatively cue a representation of E2 that in turn associatively cues a representation of R2. As such, participants are biased to a particular response (R2) in spite of never having been presented in the same trial with the S4-R2 pairing.
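The chain of associations described above can be sketched as two lookups. This is an illustrative toy, not the neural-computational model of Lowe et al. (2016): the dictionaries stand in for learned associations, and the S3 and E1 entries are assumptions added to complete the example.

```python
# Stage 1 (instrumental): outcome expectations become associated with responses.
# E2 -> R2 is taken from the text; E1 -> R1 is an assumed counterpart.
expectation_to_response = {"E1": "R1", "E2": "R2"}

# Stage 2 (Pavlovian): novel stimuli become associated with expectations.
# S4 -> E2 is taken from the text; S3 -> E1 is an assumed counterpart.
stimulus_to_expectation = {"S3": "E1", "S4": "E2"}


def infer_response(stimulus):
    """Chain stimulus -> expectation -> response (associative transitive inference)."""
    expectation = stimulus_to_expectation.get(stimulus)
    return expectation_to_response.get(expectation)


# Stage 3: S4 cues E2, which cues R2, although S4 and R2 never co-occurred in a trial.
print(infer_response("S4"))  # R2
```

A stimulus with no learned expectation returns `None`, which corresponds to having no response bias for that stimulus.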

Social Associative Two-Process Learning

In Lowe et al. (2016) it is hypothesized that participants who are not competing with another in the joint activity will “place themselves in the other’s shoes” and learn value-based outcome expectations (high versus low reward) of the other as if they were their own. We call this vicarious value learning.

It provides an adaptation of the above-mentioned 3-stage set up. In the classical set-up in Stage 2, participants perceive differential outcomes for each presented novel stimulus (Pavlovian conditioning). Instead, in the adaptation of Rittmo et al. (2020) the participants observe emotion expression from the confederate as a proxy for the actual (now non-visible) outcomes.

This Social Associative Two-Process account hypothesizes that participants valuate the novel stimuli in Stage 2 as if they were in the place of the confederate. The participants then use their updated stimulus valuations to select responses by the above-discussed associative transitive inference (in the diagram: S4->E2, E2->R2 gives S4->R2).

Such interactive value learning, based on incomplete information (i.e. the other’s responses are not visible) may be of use during joint activities where interacting agents are required to collaborate on ‘quick-fire’ tasks. Emotional cues transmitting information about how the task is going for another agent might provide a means for circumventing a lengthy trial and error learning process.

This should particularly apply in situations where differential affective states (outcome expectations) constitute a common class for task-related stimuli, i.e. where certain groups of stimuli typically evoke a particular type of response.

Emotions guide corrective responses

Another aspect of observing another’s performance during joint activity concerns monitoring the other and corrective responding, i.e. providing compensatory responses during collaborative tasks when something unexpected occurs. Emotions are said to guide corrective responses in such cases (Oatley & Johnson-Laird, 1987, 2014).

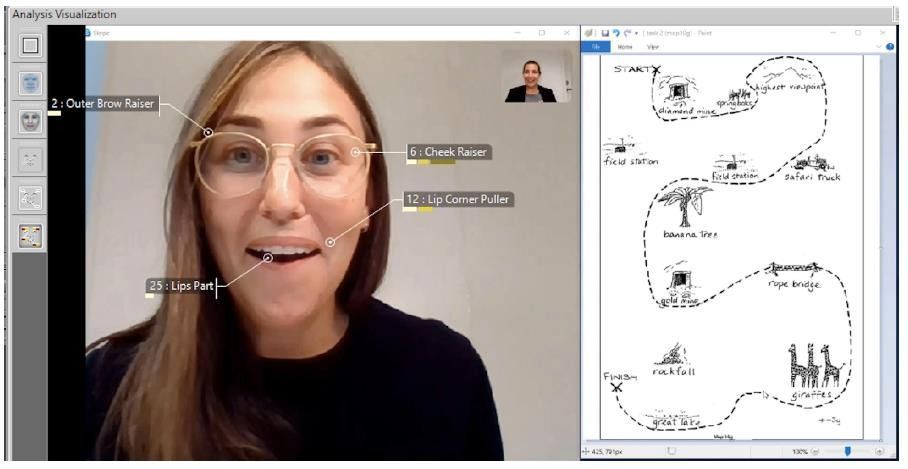

Borges et al. (2019) used a video data set collected at the University of Gothenburg that consisted of instructor-follower dyads engaging in a map navigation task. The task was designed to induce miscommunications between instructor and follower that required corrective responses (e.g. questions, confirmation statements). An example interaction is given below (as analysed using FaceReader 8.0), where one participant observes the other (inset) and they are able to communicate audibly.

The focus of the work of the DICE lab research group, however, was on the training of a neural network (Long Short-Term Memory/LSTM) for categorizing states of facial expressions of confusion from the videos.

Within the FaceReader software, confusion is considered an affective attitude, along with ‘boredom’ and ‘interest’ (Noldus 2019). It is, nevertheless, an affective state whose constituents are not universally accepted and may be specific to the particular task.

The affective attitude confusion

The benefit of being able to detect confusion states automatically, and relatively rapidly, from facial expressions of emotion is apparent in studies of Human-Computer Interaction (Lago & Guarin, 2014). If, for example, confusion in a human participant is detected by a software agent, the agent can respond to resolve the confusion before the participant becomes disengaged from the activity. This has many potential applications, from joint activities in human-computer interactive tasks to gaming and gamification of cognitive tasks.
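One simple way an agent might decide when to step in is to wait until confusion has persisted for several consecutive classifications, so that a single noisy frame does not trigger a response. The sketch below is a hypothetical illustration of that idea. The function name, the label strings, and the threshold are assumptions and do not come from FaceReader or from the studies discussed here.

```python
def should_intervene(frame_labels, min_consecutive=3):
    """Return True when the most recent labels are all 'Confusion'.

    frame_labels: affective state labels in time order (hypothetical format).
    min_consecutive: how many trailing 'Confusion' labels are required.
    """
    if min_consecutive < 1:
        raise ValueError("min_consecutive must be at least 1")
    if len(frame_labels) < min_consecutive:
        return False
    recent = frame_labels[-min_consecutive:]
    return all(label == "Confusion" for label in recent)


# Confusion has persisted for the last three classifications.
print(should_intervene(["Neutral", "Confusion", "Confusion", "Confusion"]))  # True
# A single interruption resets the run.
print(should_intervene(["Confusion", "Neutral", "Confusion"]))  # False
```

The threshold trades responsiveness against false alarms, and a suitable value would depend on the frame rate and the task.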

In Borges et al. (2019), we trained the LSTM on action unit inputs from the FaceReader-analysed videos and obtained respectable classification accuracy, precision, and recall relative to ground truth values (inter-rater coded) for Positive, Negative, Neutral and Confusion affective states.
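For readers unfamiliar with these measures, the following sketch shows how overall accuracy and per-class precision and recall can be computed from predicted and ground truth labels. The label lists are toy values invented for illustration, not data or results from Borges et al. (2019), and the function is an assumption rather than the authors' evaluation code.

```python
from collections import Counter

LABELS = ["Positive", "Negative", "Neutral", "Confusion"]


def evaluate(truth, predicted, labels=LABELS):
    """Return (accuracy, {label: (precision, recall)}) for two label sequences."""
    if len(truth) != len(predicted) or not truth:
        raise ValueError("truth and predicted must be non-empty and equally long")
    pairs = Counter(zip(truth, predicted))  # counts of (true, predicted) pairs
    accuracy = sum(pairs[(lab, lab)] for lab in labels) / len(truth)
    per_class = {}
    for lab in labels:
        true_pos = pairs[(lab, lab)]
        n_predicted = sum(pairs[(t, lab)] for t in labels)  # times lab was predicted
        n_actual = sum(pairs[(lab, p)] for p in labels)     # times lab was the truth
        precision = true_pos / n_predicted if n_predicted else 0.0
        recall = true_pos / n_actual if n_actual else 0.0
        per_class[lab] = (precision, recall)
    return accuracy, per_class


# Toy labels only: one 'Confusion' segment is misclassified as 'Neutral'.
truth = ["Positive", "Negative", "Neutral", "Confusion", "Confusion", "Neutral"]
predicted = ["Positive", "Negative", "Neutral", "Confusion", "Neutral", "Neutral"]
accuracy, per_class = evaluate(truth, predicted)
print(per_class["Confusion"])  # (1.0, 0.5)
```

In this toy case every predicted 'Confusion' label is correct (precision 1.0) but only half of the true confusion segments are found (recall 0.5), which is why both measures are reported alongside accuracy.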

We consider this work promising in the context of the Human-Robot interaction tasks we are developing (Lowe et al., 2019), which require the robot to learn vicariously from others (as in Rittmo et al., 2020) while responding to others’ affective states that might disengage them from the joint activity (e.g. as manifested in ‘confusion’).

References

Borges, N.; Lindblom, L.; Clarke, B.; Gander, A. & Lowe, R. (2019). Classifying confusion: Autodetection of communicative misunderstandings using facial action units. 2019 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), 401–406. IEEE. https://doi.org/10.1109/ACIIW.2019.8925037.

Lago, P. & Guarin, C.J. (2014). An affective inference model based on facial expression analysis. IEEE Latin America Transactions, 12(3), 423–429. https://doi.org/10.1109/TLA.2014.6827868.

Lowe, R.; Almér, A.; Gander, P. & Balkenius, C. (2019). Vicarious value learning and inference in human-human and human-robot interaction. 2019 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), 395–400. IEEE. https://doi.org/10.1109/ACIIW.2019.8925235.

Lowe, R.; Almér, A.; Lindblad, G.; Gander, P.; Michael, J. & Vesper, C. (2016). Minimalist social-affective value for use in joint action: A neural-computational hypothesis. Frontiers in Computational Neuroscience, 10, 88. https://doi.org/10.3389/fncom.2016.00088.

McCormack, J. C., Elliffe, D. & Virués‐Ortega, J. (2019). Quantifying the effects of the differential outcomes procedure in humans: A systematic review and a meta‐analysis. Journal of applied behavior analysis, 52(3), 870-892. https://doi.org/10.1002/jaba.578.

Noldus. (2019). FaceReader: Tool for automatic analysis of facial expression, Version 8.0 [Software]. Wageningen, The Netherlands: Noldus Information Technology B.V.

Oatley, K. & Johnson-Laird, P.N. (1987). Towards a cognitive theory of emotions. Cognition and emotion, 1(1), 29-50. https://doi.org/10.1080/02699938708408362.

Oatley, K. & Johnson-Laird, P.N. (2014). Cognitive approaches to emotions. Trends in cognitive sciences, 18(3), 134-140. https://doi.org/10.1016/j.tics.2013.12.004.

Rittmo, J.; Carlsson, R.; Gander, P. & Lowe, R. (2020). Vicarious Value Learning: Knowledge transfer through affective processing on a social differential outcomes task. Acta Psychologica, 209, 103134. https://doi.org/10.1016/j.actpsy.2020.103134.

Trapold, M.A. (1970). Are expectancies based upon different positive reinforcing events discriminably different? Learning and Motivation, 1, 129–140. https://doi.org/10.1016/0023-9690(70)90079-2.

Urcuioli, P.J. (2005). Behavioral and associative effects of differential outcomes in discrimination learning. Animal Learning & Behavior, 33, 1–21. https://doi.org/10.3758/BF03196047.
