5 tips on how to measure facial expressions


Published on Tue 09 Aug. 2022

Facial expressions are an important form of non-verbal communication, used by humans and some animals. They convey personal emotions and are often analyzed by researchers who study human behavior. But how do you measure facial expressions? We share 5 tips!

What are facial expressions?

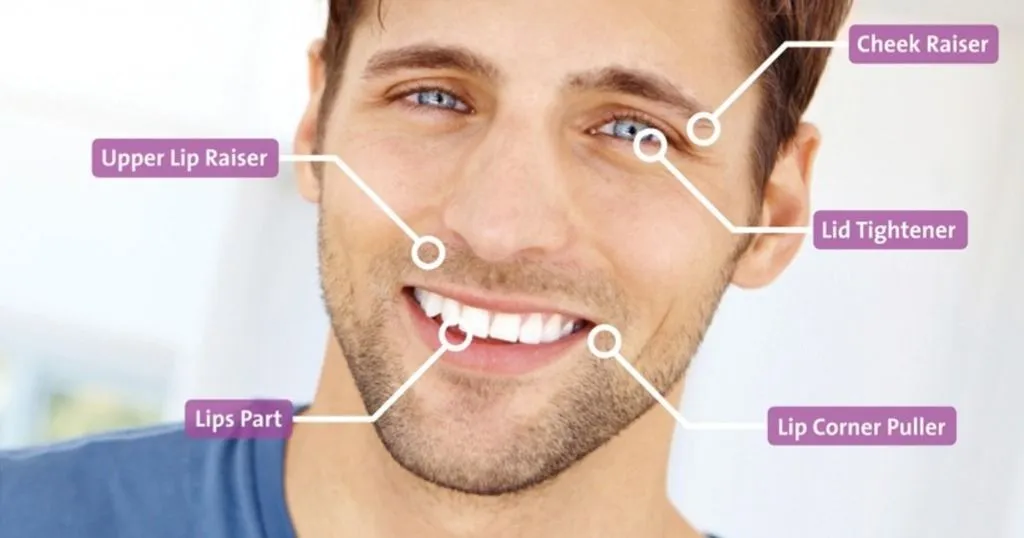

First of all, you might wonder what facial expressions are. They are a form of non-verbal behavior, created by movements of the muscles beneath the skin of the face. Because these expressions convey emotions, they can give researchers who study human behavior crucial insights into why people do what they do.

Facial expressions can be measured and analyzed in several ways. One way is to observe a person and manually code any facial expressions. Another way is by automated facial expression analysis, using a scientific software tool like FaceReader.

FaceReader cannot be used for identifying people, but it can recognize facial characteristics and expressions, and classify facial movements like head orientation, or whether a person has their eyes open or closed.

5 tips on how to measure facial expressions

Are you working with a tool like FaceReader and wondering how to measure facial expressions? Here are 5 useful tips for the automated measurement of facial expressions.

1. Make video recordings

It may sound very simple, but recording video and playing it back enables more detailed analysis of facial expressions. An annotator who codes events and facial expressions live, without a video recording, is likely to miss essential information. Even with video, however, it can be difficult to keep the face in view.

For example, Forestell and Mennella (2012) recorded infants as their mothers fed them green beans, and then manually coded Action Units to objectively quantify the infants’ facial expressions. In this study, 16 mother-infant dyads had to be excluded because the infant’s face was partially or fully occluded during the feeding session. Fortunately, the final sample of 92 dyads was sufficient to complete the study.

Investigating facial expressions in autism and borderline personality disorder

Video recordings of the reactive facial expressions of participants have also been used with people with autism spectrum disorder (ASD) or borderline personality disorder (BPD).

In a study by De Meulemeester et al. (2021), the capacity for transparency estimation in individuals high and low in BPD features was investigated by presenting a series of emotion-eliciting movie clips to the participants while filming their reactive facial expressions.

After each clip, participants estimated how transparent their emotional experience was while watching the particular clip. In addition, the observable transparency (i.e. how observable their mental states in fact are to others) of the participants’ emotional experience was defined as the actual intensity of their facial emotional expressions, measured via FaceReader software.

2. Use a stationary microphone and chair

Platt et al. (2013) gave a great tip on how to keep participants from moving, shaking, or turning their heads. They placed a voice-recording device in front of the participant and asked the participant to speak in its direction.

The researchers explained that this kept the participant from turning to face the interviewer. Participants turned to the interviewer only to exchange information; these parts were irrelevant for the study and were later excluded.

Furthermore, a participant sitting on an office chair can easily swivel or roll during the session. Platt et al. therefore fixed the chair and table so that no turning was possible.

In short, this study aimed to determine whether individuals with a fear of being laughed at (gelotophobia) display less facial joy (Duchenne display) in response to enjoyable emotions in general, or only in response to those that elicit laughter.

3. Ensure good illumination of the participant’s face

Whether working in a laboratory or on-site, adequate illumination is of great importance. This is especially true when using software such as FaceReader to recognize facial expressions, because good lighting can increase the reliability of your results.

For example, Danner et al. (2013) used FaceReader software to automatically analyze facial expressions of participants drinking fruit juice. Their setup provided sufficiently accurate data to detect significant differences in facial expressions elicited by different orange juice samples.

A setup can be optimized by using photo lamps, which provide diffused frontal lighting on the test participant. Strong reflections or shadows (for example, those caused by ceiling lights) should be avoided.


4. Choose a lower frame rate for analysis

When using the software to analyze videos, make sure to select a frame rate that suits your research needs. A lower frame rate allows for a faster analysis while still providing the results you need. Gorbunov (2013), for example, used FaceReader software to analyze every third frame of the video.

He explains that this frame rate was chosen to reduce both the computational time needed to generate the quantitative data describing facial expressions and the computational time required to analyze these data. In this particular case, facial expressions were measured in the Mars-500 isolation experiment.

In his dissertation, Gorbunov explains that the chosen frame rate still yielded smooth dependencies describing the facial expressions.
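To make the idea of analyzing every third frame concrete, here is a minimal Python sketch. It is not FaceReader's implementation or API; the function names and the 30 fps recording rate are assumptions chosen purely for illustration. It only shows which frames would be kept and what analysis rate results.

```python
def frames_to_analyze(total_frames: int, step: int = 3) -> list[int]:
    """Return zero-based indices of the frames to keep, taking every `step`-th frame."""
    if step < 1:
        raise ValueError("step must be at least 1")
    return list(range(0, total_frames, step))


def effective_rate(recorded_fps: float, step: int = 3) -> float:
    """Analysis frame rate that results from keeping every `step`-th frame."""
    return recorded_fps / step


# Hypothetical example: one second of video recorded at an assumed 30 fps.
indices = frames_to_analyze(total_frames=30, step=3)
print(indices)               # [0, 3, 6, 9, 12, 15, 18, 21, 24, 27]
print(effective_rate(30.0))  # 10.0
```

With these assumed numbers, only a third of the frames are processed. Whether the remaining rate is fine enough depends on how quickly the expressions of interest change.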

5. Record context

Now that you know how to measure facial expressions, it is important to look at the context of the behavior. You can include additional measurements in your study, such as eye tracking, physiological measurements, and screen capture.

For example, try to answer this question: which user interface was the test participant looking at, or which events occurred in the room, while the facial expressions recognized as ‘happy’ were recorded?

Taking the context into account can complement the strengths of facial expression analysis by revealing the environmental cues and context that shape facial expressions and emotions.
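One way to link expressions to context is to put both on a shared timeline. The Python sketch below assumes that expression samples and context events have already been exported with timestamps on the same clock. The class names, field names, and sample data are hypothetical and do not reflect any particular tool's export format.

```python
from dataclasses import dataclass


@dataclass
class ExpressionSample:
    time_s: float  # seconds since the start of the recording
    label: str     # expression label, e.g. "happy"


@dataclass
class ContextEvent:
    start_s: float
    end_s: float
    description: str  # e.g. which screen was shown or what happened in the room


def context_for(samples, events, label):
    """Pair each sample carrying `label` with the context events active at that time."""
    matches = []
    for sample in samples:
        if sample.label != label:
            continue
        active = [e.description for e in events
                  if e.start_s <= sample.time_s < e.end_s]
        matches.append((sample.time_s, active))
    return matches


# Hypothetical data for illustration only.
samples = [
    ExpressionSample(1.0, "neutral"),
    ExpressionSample(4.5, "happy"),
    ExpressionSample(9.0, "happy"),
]
events = [
    ContextEvent(0.0, 5.0, "login screen"),
    ContextEvent(5.0, 12.0, "checkout screen"),
    ContextEvent(8.0, 10.0, "colleague enters room"),
]

for time_s, active in context_for(samples, events, "happy"):
    print(time_s, active)
# 4.5 ['login screen']
# 9.0 ['checkout screen', 'colleague enters room']
```

The sketch only works if all data streams are synchronized. If the video, screen capture, and event log start at different moments, their timestamps have to be aligned first.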

References

- Danner, L.; Sidorkina, L.; Joechl, M.; Duerrschmid, K. (2013). Make a face! Implicit and explicit measurement of facial expressions elicited by orange juices using face reading technology. Food Quality and Preference, 32, 167-172. doi:10.1016/j.foodqual.2013.01.004

- De Meulemeester, C.; Lowyck, B.; Boets, B.; Van der Donck, S.; Luyten, P. (2021). Do my emotions show or not? Problems with transparency estimation in women with borderline personality disorder features. Personality Disorders: Theory, Research, and Treatment, 13(3), 288-299. doi:10.1037/per0000504

- Forestell, C.A.; Mennella, J.A. (2012). More than just a pretty face. The relationship between infant’s temperament, food acceptance, and mothers’ perceptions of their enjoyment of food. Appetite, 58, 1136-1142.

- Gorbunov, R. (2013). Monitoring emotions and cooperative behavior. Eindhoven: Technische Universiteit Eindhoven. ((Co-)promot.: prof.dr. G.W.M. Rauterberg, dr.ir. E.I. Barakova & dr. K.P. Tuyls).

- Platt, T.; Hofmann, J.; Ruch, W.; Proyer, R.T. (2013). Duchenne display responses towards sixteen enjoyable emotions: Individual differences between no and fear of being laughed at. Motivation and Emotion, 37, 776-786. doi:10.1007/s11031-013-9342-9
