How FaceReader is validated in research

Published on Wed 26 Jun. 2024

Topics: Action Units, Behavioral Research, Emotions, FaceReader, Facial Expression Analysis

From psychology to education and consumer behavior, facial expressions give researchers valuable insight into human emotions. Yes, you can use surveys or interviews to ask people what they feel, but we are often unable to recognize or explain exactly what we feel in the moment.

Facial expressions, on the other hand, reveal our immediate and unconscious emotional reactions, and with the latest tools you can measure them quickly and objectively. In this blog post, we'll take a closer look at the validity and different applications of FaceReader, the most robust tool for automated facial expression analysis.

What can you measure with FaceReader?

FaceReader measures the six basic emotions proposed by Ekman [1]: happiness, surprise, anger, fear, disgust, and sadness. The software also recognizes contempt and a neutral state, and provides information on gaze direction and head orientation.

You'll also find measurements of Action Units (AUs), which describe muscle movements in the face. Moreover, FaceReader shows how positive or negative an emotion is (valence) and the level of activity or alertness (arousal). FaceReader can visualize these two dimensions as a circumplex model, as described in Russell's theory of emotion [2].
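To make valence and the circumplex model more concrete, here is a minimal Python sketch. It assumes that valence is the intensity of happiness minus the intensity of the strongest negative emotion (sadness, anger, fear, or disgust), with all intensities between 0 and 1. This reflects how FaceReader's documentation has described valence, but treat it as an assumption and check the FaceReader Methodology note for the exact definition in your version. The function names, the example intensities, and the `arousal_midpoint` cut-off used to assign a quadrant are hypothetical and not part of any FaceReader output or API.

```python
# Minimal sketch (assumed definition): valence = happiness intensity minus the
# intensity of the strongest negative emotion. Verify against your FaceReader version.
NEGATIVE_EMOTIONS = ("sadness", "anger", "fear", "disgust")


def valence(intensities: dict[str, float]) -> float:
    """Return a value in [-1, 1] from per-emotion intensities in [0, 1]."""
    strongest_negative = max(intensities.get(e, 0.0) for e in NEGATIVE_EMOTIONS)
    return intensities.get("happiness", 0.0) - strongest_negative


def circumplex_quadrant(valence_value: float, arousal: float,
                        arousal_midpoint: float = 0.5) -> str:
    """Place a (valence, arousal) point in one of four circumplex quadrants.

    arousal_midpoint is a hypothetical cut-off, not a FaceReader setting;
    a valence of exactly zero is counted as pleasant here.
    """
    pleasantness = "pleasant" if valence_value >= 0 else "unpleasant"
    activation = "activated" if arousal >= arousal_midpoint else "deactivated"
    return f"{activation}-{pleasantness}"


# Hypothetical intensities for a single video frame.
frame = {"happiness": 0.8, "sadness": 0.2, "anger": 0.0, "fear": 0.3, "disgust": 0.2}
v = valence(frame)
print(round(v, 2))                          # 0.5
print(circumplex_quadrant(v, arousal=0.7))  # activated-pleasant
```

Here the strongest negative emotion is fear (0.3), so valence is 0.8 minus 0.3, about 0.5, and an arousal value above the assumed midpoint places the frame in the activated, pleasant quadrant.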

FaceReader also offers modules to analyze your data in more detail, create custom expressions, measure heart rate and heart rate variability, and analyze eating and drinking behavior. You can also choose Baby FaceReader for research with infants between 6 and 24 months old, or FaceReader Online for greater flexibility in data collection.

In a video, Dr. Erin Heerey from Western University explains how FaceReader has not only increased the throughput, accuracy, and ease of scoring social behaviors, but has also made it easier to share this data with other researchers.

How researchers determine FaceReader's accuracy

With all of these options for automated analysis available, it's important to know whether it actually works. How accurately does FaceReader determine facial expressions? Fortunately, FaceReader has been validated extensively by various parties and researchers, unlike many other facial expression analysis tools on the market, both free and paid.

To examine FaceReader's validity, these researchers compared the software's results with those of trained human coders. Another way to study accuracy is to compare FaceReader's results with the intended expressions in existing databases.

Examples include the Amsterdam Dynamic Facial Expression Set (ADFES) [3], the Radboud Faces Database (RaFD) [4], and the Warsaw Set of Emotional Facial Expression Pictures (WSEFEP) [5]. These databases consist of highly standardized images of emotional expressions from people of different ethnicities.
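Comparing software output with a database's intended expressions comes down to simple bookkeeping. The sketch below assumes each stimulus has already been reduced to a single predicted label (for example, the expression with the highest intensity) and computes accuracy per intended emotion and overall. The function names and data are made up for illustration; they are not results from ADFES, RaFD, or WSEFEP.

```python
from collections import Counter


def accuracy_per_emotion(intended: list[str], predicted: list[str]) -> dict[str, float]:
    """Share of stimuli of each intended emotion that received the matching label."""
    if len(intended) != len(predicted):
        raise ValueError("intended and predicted must have the same length")
    totals = Counter(intended)
    hits = Counter(t for t, p in zip(intended, predicted) if t == p)
    return {emotion: hits[emotion] / n for emotion, n in totals.items()}


def overall_accuracy(intended: list[str], predicted: list[str]) -> float:
    """Share of all stimuli whose predicted label matches the intended one."""
    correct = sum(t == p for t, p in zip(intended, predicted))
    return correct / len(intended)


# Hypothetical data: intended labels from a stimulus set, predicted labels from software.
intended = ["happiness"] * 4 + ["anger"] * 4
predicted = ["happiness"] * 4 + ["anger", "anger", "anger", "disgust"]
print(accuracy_per_emotion(intended, predicted))  # {'happiness': 1.0, 'anger': 0.75}
print(overall_accuracy(intended, predicted))      # 0.875
```

Overall accuracy weights every stimulus equally, whereas averaging the per-emotion rates weights every emotion equally. The two differ when a database contains unequal numbers of stimuli per emotion, so it is worth checking which one a study reports.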

Accuracy of classification

Let's look at a few studies that focus on FaceReader's accuracy in determining the basic emotions.

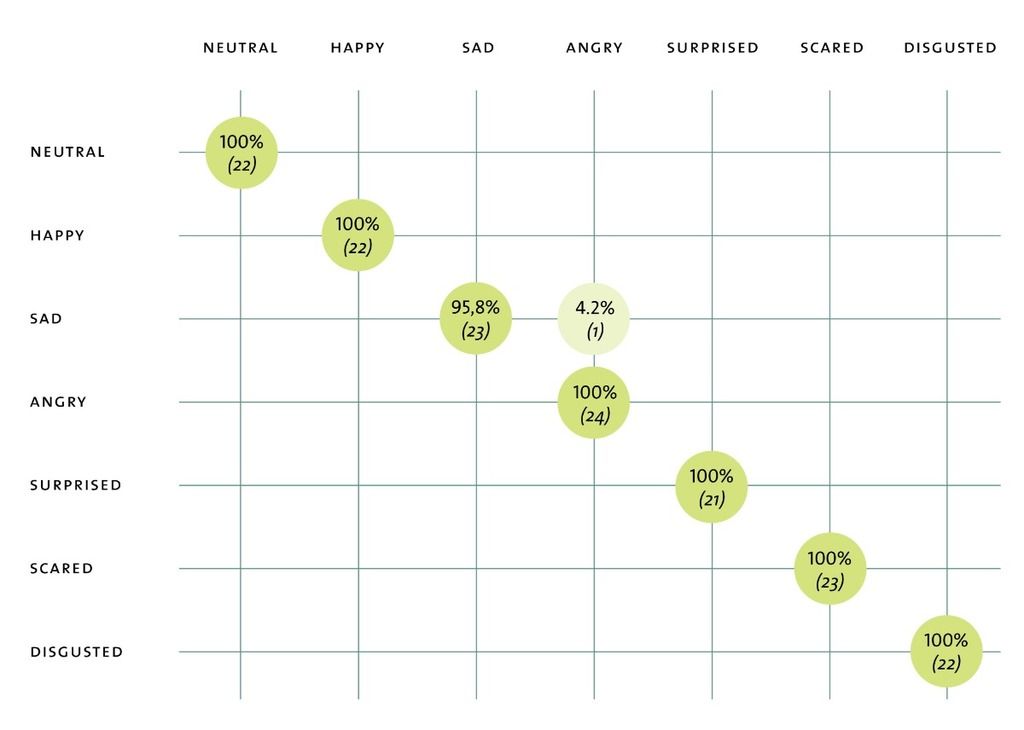

- A comparison between FaceReader 9 results and the ADFES dataset, available in our FaceReader Methodology note, shows that FaceReader determines the basic emotions very accurately.

- Stöckli and her colleagues found that FaceReader was 88% accurate in determining facial expressions, while iMotions' AFFDEX algorithm reached only 70% accuracy [6].

- Lewinski and his colleagues validated FaceReader 6 against the WSEFEP and ADFES databases [7]. They found an average accuracy rate of 88%. FaceReader performed best at recognizing happiness (96%) and had the most difficulty recognizing anger (76%).

- A systematic literature review by Landmann, covering different FaceReader versions, reported accuracy rates between 80 and 90 percent [8]. As Landmann puts it: "Since, in the conducted studies, such mappings by humans achieved maximally equally good results, it can be assumed that FaceReader evaluations do not require any additional correction by humans and even outperform humans in some cases."

How accurately does FaceReader measure Action Units?

Several studies have also examined FaceReader's accuracy in detecting Action Units.

- To examine the accuracy of FaceReader's Action Unit detection, Gudi and Ivan measured the agreement between human coders and the FaceReader software [9]. They found an average agreement score of 0.81, indicating that FaceReader provides reliable results, with agreement scores between 0.79 and 0.95 for most AUs.

- The same researchers also compared Action Unit results from Baby FaceReader with those of human coders and found an average agreement score of 0.83, with only two AUs scoring below 0.80. These results indicate that Baby FaceReader is also a reliable tool for facial expression analysis in infants.

- Zaharieva and her team also studied AU classification in Baby FaceReader [10]. They found that automated detection of smiling (AU12) discriminated well between positive and negative or neutral facial expressions, while brow lowering (AU3 + AU4) performed best at distinguishing neutral from negative expressions.

- In the Lewinski study discussed above, the team also assessed how accurately FaceReader 6 detects AUs [7]. They found that FaceReader detects AUs 1, 2, 4, 5, 6, 9, 12, 15, and 25 with high accuracy, making these AUs suitable for use in research.
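Agreement scores like these can be computed in several ways. One common choice for AU detection is the F1 score, which combines how many human-coded AU occurrences the software finds with how many of its detections the human coder confirms. The sketch below assumes frame-by-frame binary codings (AU present or absent) with the human coder as the reference; the function names and toy codings are hypothetical, and F1 is not necessarily the measure used in the studies above.

```python
def f1_agreement(human: list[bool], software: list[bool]) -> float:
    """F1 score for one AU, treating the human coder as the reference."""
    if len(human) != len(software):
        raise ValueError("both codings must cover the same frames")
    pairs = list(zip(human, software))
    tp = sum(h and s for h, s in pairs)      # both mark the AU
    fp = sum(s and not h for h, s in pairs)  # software only
    fn = sum(h and not s for h, s in pairs)  # human only
    if tp + fp + fn == 0:
        # Neither rater coded the AU; treated as full agreement (one convention).
        return 1.0
    return 2 * tp / (2 * tp + fp + fn)


def mean_agreement(codings: dict[str, tuple[list[bool], list[bool]]]) -> float:
    """Average F1 across AUs; codings maps an AU name to (human, software)."""
    scores = [f1_agreement(h, s) for h, s in codings.values()]
    return sum(scores) / len(scores)


# Hypothetical frame-by-frame codings for two AUs.
codings = {
    "AU12": ([True, True, True, True, False, False, False, False],
             [True, True, True, False, True, False, False, False]),
    "AU4": ([True, True, False, False],
            [True, True, False, False]),
}
print(f1_agreement(*codings["AU12"]))  # 0.75
print(mean_agreement(codings))         # 0.875
```

In the toy data, AU12 has three frames where both raters mark the AU, one software-only detection, and one miss, giving F1 = 6 / 8 = 0.75; AU4 agrees perfectly, so the mean across the two AUs is 0.875.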

Why you should choose automated facial expression analysis

Automated facial expression analysis is fast, objective, and reliable. As the studies above show, FaceReader determines facial expressions with high accuracy, roughly 80 to 90 percent for the basic emotions, and offers researchers a wealth of options when collecting or analyzing their data. It allows you to collect more detailed emotion data in less time.

References

1. Ekman, P. (1992). An argument for basic emotions. Cognition & Emotion, 6(3–4), 169–200. https://doi.org/10.1080/02699939208411068

2. Scarantino, A. (2015). Basic emotions, psychological construction, and the problem of variability. In L.F. Barrett & J.A. Russell (Eds.), The psychological construction of emotion (pp. 334–376). The Guilford Press. https://psycnet.apa.org/record/2014-10678-014

3. Van der Schalk, J.; Hawk, S.; Fischer, A.; Doosje, B. (2011). Moving faces, looking places: Validation of the Amsterdam Dynamic Facial Expression Set (ADFES). Emotion, 11(4), 907–920. https://doi.org/10.1037/a0023853

4. Langner, O.; Dotsch, R.; Bijlstra, G.; Wigboldus, D.; Hawk, S.; van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cognition & Emotion, 24(8), 1377–1388. https://doi.org/10.1080/02699930903485076

5. Olszanowski, M.; Pochwatko, G.; Kuklinski, K.; Scibor-Rylski, M.; Lewinski, P.; Ohme, R. (2014). Warsaw set of emotional facial expression pictures: a validation study of facial display photographs. Frontiers in Psychology, 5. https://doi.org/10.3389/fpsyg.2014.01516

6. Stöckli, S.; Schulte-Mecklenbeck, M.; Borer, S.; Samson, A. (2017). Facial expression analysis with AFFDEX and FACET: A validation study. Behavior Research Methods, 50, 1446–1460. https://doi.org/10.3758/s13428-017-0996-1

7. Lewinski, P.; den Uyl, T.; Butler, C. (2014). Automated Facial Coding: Validation of Basic Emotions and FACS AUs in FaceReader. Journal of Neuroscience, Psychology, and Economics, 7(4), 227–236. https://doi.org/10.1037/npe0000028

8. Landmann, E. (2023). I can see how you feel - Methodological considerations and handling of Noldus's FaceReader software for emotion measurement. Technological Forecasting and Social Change, 197, 122889. https://doi.org/10.1016/j.techfore.2023.122889

9. Gudi, A.; Ivan, P. (2022). Validation Action Unit Module [White paper]. Available at Noldus Information Technology upon request.

10. Zaharieva, M.; Salvadori, E.; Messinger, D.; Visser, I.; Colonnesi, C. (2024). Automated facial expression measurement in a longitudinal sample of 4- and 8-month-olds: Baby FaceReader 9 and manual coding of affective expressions. Behavior Research Methods. https://doi.org/10.3758/s13428-023-02301-3
