How to master automatic Facial Expression Recognition

Many researchers have turned towards using automated facial expression recognition software to better provide an objective assessment of emotions.

Published on Thu 30 Aug. 2018

Emotions can be both a gift and a curse. They are what makes us human, and we all experience them, whether through the ability to feel love and happiness or pain and suffering. They are also difficult to hide: all emotions, suppressed or not, are likely to have a physical effect. Studying emotions can be hard, however, because it is often unclear exactly how to interpret them.

Automatic facial expression recognition

For this reason, many researchers have turned towards using automated facial expression recognition software to better provide an objective assessment of emotions. Because human assessment of emotions has many limitations and biases, facial expression technology can be used to deliver a greater level of insight into behavior patterns.

This video shows FaceReader results.

Noldus’ FaceReader software is one example of a tool that is specifically designed for this purpose, and is used in human behavior research for applications such as psychological research, usability studies, and advertising and consumer behavior.

Classifying facial expressions and emotions

The American psychologist Paul Ekman found that some facial expressions are universal, and can be reliably measured in different cultures. FaceReader bases its algorithms on these original basic expressions, and can automatically determine the presence and intensity of the following emotions: Happy, Sad, Angry, Surprised, Scared, Disgusted, and Neutral (no emotion). It can also analyze interest, boredom, and confusion, which are three commonly occurring affective attitudes.

The software works by following these consecutive steps:

- Face Finding – FaceReader finds an accurate position of the face using the popular Viola-Jones algorithm

- Modeling – An accurate 3D model of the face is made, which describes over 500 key points

- Classification – An artificial neural network, trained on over 10,000 pictures, classifies the basic emotional expressions and a number of properties

- Deep face classification – This method allows FaceReader to directly classify the face from image pixels using an artificial neural network to recognize patterns. This allows the software to analyze the face even if a part of it is hidden
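To make the face-finding step more concrete, here is a minimal sketch of the idea at the core of the Viola-Jones algorithm: an integral image (summed-area table) lets the sum of any rectangle be read with four lookups, so simple light/dark "Haar-like" features can be evaluated very quickly. This is not FaceReader's implementation. The function names and the toy 4x4 patch are assumptions made for illustration, and a real detector combines many such features in a trained cascade.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> Image:
    """Return a summed-area table padded with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Image, top: int, left: int, height: int, width: int) -> int:
    """Sum of the pixels inside a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii: Image, top: int, left: int, height: int, width: int) -> int:
    """Two-rectangle Haar-like feature: upper half minus lower half."""
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return upper - lower


# Toy grayscale patch (hypothetical values): a dark band above a bright band.
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(patch)
feature = two_rect_feature(ii, 0, 0, 4, 4)
print(feature)
```

A strongly negative value here means the upper half of the window is much darker than the lower half, which is the kind of contrast pattern a trained detector learns to associate with face regions.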

Types of input sources commonly used with FaceReader include video analysis, live analysis using a webcam, and still images. Once classified, emotions can be represented as line and/or bar graphs as well as in a pie chart, which shows the percentage per emotion.
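As a rough illustration of how a "percentage per emotion" summary could be derived, the sketch below takes per-frame intensity scores and counts how often each emotion is the strongest one. The data, the function names, and the choice to count the dominant emotion per frame are all assumptions for this example. FaceReader's own aggregation may work differently.

```python
from collections import Counter
from typing import Dict, List

EMOTIONS = ["Happy", "Sad", "Angry", "Surprised", "Scared", "Disgusted", "Neutral"]


def dominant_emotion(frame: Dict[str, float]) -> str:
    """Return the emotion with the highest intensity in one frame."""
    return max(frame, key=frame.get)


def emotion_percentages(frames: List[Dict[str, float]]) -> Dict[str, float]:
    """Percentage of frames in which each emotion is dominant."""
    if not frames:
        raise ValueError("at least one frame is required")
    counts = Counter(dominant_emotion(f) for f in frames)
    return {e: 100.0 * counts[e] / len(frames) for e in EMOTIONS}


# Hypothetical per-frame intensities (0.0 to 1.0), not real FaceReader output.
frames = [
    {"Happy": 0.8, "Neutral": 0.1, "Surprised": 0.1},
    {"Happy": 0.6, "Neutral": 0.3, "Surprised": 0.1},
    {"Happy": 0.2, "Neutral": 0.7, "Surprised": 0.1},
    {"Happy": 0.1, "Neutral": 0.2, "Surprised": 0.7},
]
percentages = emotion_percentages(frames)
for emotion, share in percentages.items():
    print(f"{emotion}: {share:.1f}%")
```

The resulting shares sum to 100 and could be passed directly to any charting tool to draw a pie chart.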

In addition, FaceReader can calculate gaze direction, head orientation, and characteristics such as gender and age.

Action unit analysis

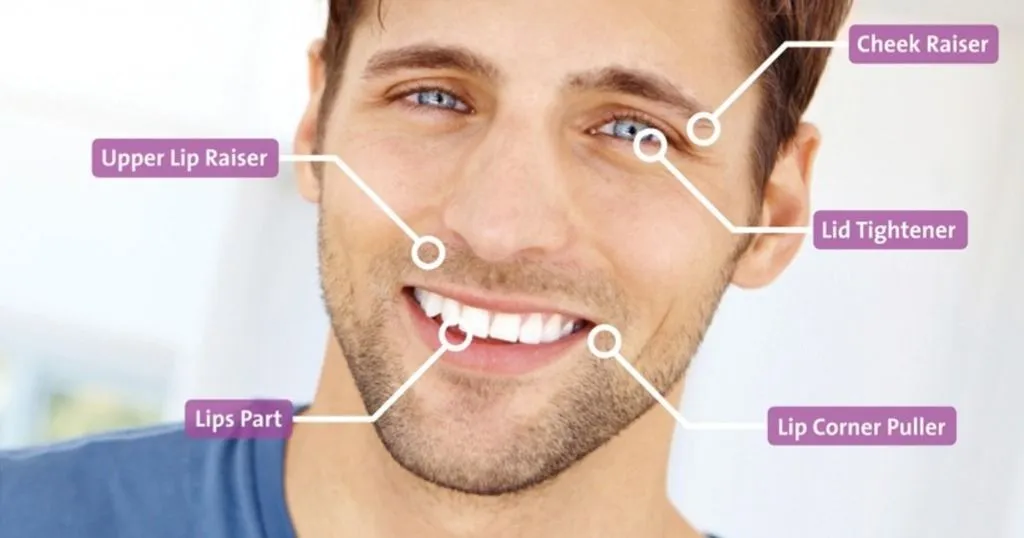

Automated facial coding can be further extended to an action unit level, according to the FACS (Facial Action Coding System). To backtrack a little, the FACS is a facial expression coding system meant for measuring facial expressions and describing all observable facial movement. The movements of individual facial muscles are broken down into specific action units. FaceReader’s Action Unit Module is capable of analyzing 20 of these action units, including Cheek Raiser, Nose Wrinkler, and Dimpler.

Action Units are responsible for facial expressions.
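To show how action units relate to expressions, here is a small sketch that checks whether the active units in a frame contain a commonly cited FACS prototype for happiness: AU6 (Cheek Raiser) together with AU12 (Lip Corner Puller). The intensity values, the 0.5 threshold, and the function names are hypothetical. This is not the Action Unit Module's API or output format.

```python
from typing import Dict, FrozenSet, Set

# FACS numbers and names for a few action units.
AU_NAMES: Dict[int, str] = {
    6: "Cheek Raiser",
    9: "Nose Wrinkler",
    12: "Lip Corner Puller",
    14: "Dimpler",
}

# Commonly cited prototype for happiness: AU6 + AU12.
HAPPINESS_PROTOTYPE: FrozenSet[int] = frozenset({6, 12})


def active_units(intensities: Dict[int, float], threshold: float = 0.5) -> Set[int]:
    """Return the action units whose intensity reaches the (assumed) threshold."""
    return {au for au, value in intensities.items() if value >= threshold}


def matches_prototype(active: Set[int], prototype: FrozenSet[int]) -> bool:
    """True if every action unit in the prototype is active."""
    return prototype <= active


# Hypothetical intensities for one video frame.
frame = {6: 0.8, 12: 0.9, 14: 0.1}
active = active_units(frame)
active_names = [AU_NAMES[au] for au in sorted(active)]
is_happy = matches_prototype(active, HAPPINESS_PROTOTYPE)
print(active_names, is_happy)
```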

Fields of research using facial expression detection

Because FaceReader can serve as an automated, non-intrusive measure of engagement, it can be used in many applications. Some notable examples of fields using facial expression research include:

- Ad testing and consumer behavior

FaceReader can be used to examine metrics such as consumer interest in a product and the effectiveness of video advertisements. Emotional responses to advertisements tend to have a heavy influence on consumers’ intentions to buy a product. Using facial coding analysis, researchers could perform testing and present evidence that a certain expression (e.g., Happy) may predict an advertisement’s success or a positive shift in attitude towards the brand.

- Usability testing

In order to understand how real users experience a website or application, researchers can use facial expression technology to analyze participants’ facial expressions during testing. This helps to analyze how participants feel as they navigate through a site, and could clarify which tasks are frustrating or enjoyable, as well as what needs to be improved.

- Psychological research

If you have an interest in better understanding the emotions and communication between people, you can benefit greatly from a more in-depth understanding of facial expressions. Some examples of interactions that could be studied with FaceReader include those between parents and children, teachers and students, businesspeople and negotiators, and health professionals and patients.

Would you like to learn more?

For more information, please feel free to contact us to speak with a Sales Consultant about how FaceReader can help you with your research. In addition, you can download a free white paper about FaceReader’s methodology. We look forward to working with you!

FREE WHITE PAPER: FaceReader methodology

Download the free FaceReader methodology note to learn more about facial expression analysis theory.

- How FaceReader works

- More about the calibration

- Insight into the quality of analysis and output

More information on these websites

- https://noldus.com/facereader

- http://www.usabilityfirst.com/usability-methods/usability-testing/

- http://www.apa.org/science/about/psa/2011/05/facial-expressions.aspx

- https://en.wikipedia.org/wiki/Facial_Action_Coding_System

- https://www.insightsassociation.org/article/facial-recognition-market-research-next-big-thing

- https://en.wikipedia.org/wiki/Paul_Ekman
